
Computational Biology

BIOSC 1540

Fall 2024 • University of Pittsburgh • Department of Biological Sciences

This course offers a systematic examination of foundational concepts and an in-depth understanding of the significant fields of computational biology. Students learn about genomics, transcriptomics, computer-aided drug design, and molecular simulations in the context of current research applications. This course is the first stepping stone for undergraduates at the University of Pittsburgh before taking Computational Genomics (BIOSC 1542) or Simulation and Modeling (BIOSC 1544).

Contributions¶

All comments, questions, concerns, feedback, or suggestions are welcome!

License¶

Code contained in this project is released under the GPLv3 license as specified in LICENSE.md.

All other data, information, documentation, and associated content provided within this project are released under the CC BY-NC-SA 4.0 license as specified in LICENSE_INFO.md.

Why are we using copyleft licenses? Many people have dedicated countless hours to producing high-quality materials incorporated into this website. We want to ensure that students maintain access to these materials.

Web analytics¶

Why would we want to track website traffic?

An instructor can gain insights into how students engage with online teaching materials by analyzing web analytics. This information is instrumental in assessing the effectiveness of the materials. Web analytics reveal the popularity of specific topics or sections among students and empower instructors to tailor future lectures or discussions. Analytics also provides valuable data for curriculum development, helping instructors identify trends, strengths, and weaknesses in course materials. Additionally, instructors may leverage web analytics as evidence of their commitment to continuous improvement in teaching methods, which is helpful in discussions related to professional development, promotions, or tenure.

We track website traffic using Plausible, which is privacy-friendly, uses no cookies, and is compliant with GDPR, CCPA, and PECR.

Syllabus ↵

Syllabus¶

Semester: Fall 2024

Meeting time: Tuesdays and Thursdays from 4:00 - 5:15 pm.

Location: 1501 Posvar

Instructor: Alex Maldonado, PhD (he/him/his)

Email: alex.maldonado@pitt.edu

Office hours:

| Day | Time | Location |
|---|---|---|
| Tuesday | 11:30 am - 12:30 pm | 102 Clapp Hall |
| Thursday | 11:30 am - 12:30 pm | 315 Clapp Hall |

Catalog description¶

This course gives students a broad understanding of how computational approaches can solve problems in biology. We will also explore the biological and computational underpinnings of the methods.

Note

The catalog course description mandates what material this course has to cover. How the material is covered is at the discretion of the instructor.

Prerequisites¶

To enroll, you must have received a minimum grade of C in Foundations of Biology 2 (BIOSC 0160, 0165, or 0716).

Programming experience

In contrast to previous semesters, no programming will be necessary for the successful completion of this course.

Course philosophy¶

The Computational Biology course explores how to gain insight into biological phenomena with computational methodologies. The teaching philosophy that guides this course is described below.

Critical thinking is paramount¶

Critical thinking and problem-solving are essential in education, especially in computational biology. My teaching approach is centered around engaging students in real-world scenarios and challenges. This requires them to apply, analyze, and synthesize information and helps them understand the practical application of computational biology, moving away from rote memorization. This approach fosters a deep understanding of the subject matter, encouraging students to explore the complexities and interconnectedness of biological systems through computational methods. By encouraging inquiry, debate, and collaboration, I aim to equip students with the skills necessary to navigate and contribute to the ever-evolving landscape of computational biology. The goal is not just to impart knowledge but to cultivate innovative thinkers and problem solvers who are prepared to tackle the challenges of the future.

Unlike traditional biology courses such as foundations of biology or biochemistry, this computational biology class stands out with its unique learning approach, resembling a computer science course. While the problems and concepts explored may have roots in similar biological foundations, the course's computational nature demands a distinct set of skills and mindsets. As part of this course, students will be expected to take the initiative in their learning and problem-solving. They will encounter open-ended challenges that transcend the boundaries of a single discipline, fostering their ability to think critically and independently. This course aims to foster a spirit of independent inquiry and adaptability, preparing students to thrive in the multidisciplinary and rapidly evolving field of computational biology.

Info

In traditional biology courses, such as foundations of biology or biochemistry, students often learn through rote memorization of facts, concepts, and processes. For example, students might be asked to memorize the steps of DNA replication or the names and functions of various enzymes involved in cellular metabolism. While this knowledge is essential, it does not always challenge students to apply their understanding to novel situations or develop problem-solving skills.

In contrast, a computational biology course focuses on problem-based and open-ended learning, which is more akin to the approach in computer science courses. Students are presented with complex, real-world biological problems that require them to apply their knowledge innovatively and develop their own computational solutions. For instance, students might be asked to analyze large genomic datasets to identify patterns or anomalies, develop algorithms to predict protein structures, or create models to simulate the spread of infectious diseases.

These open-ended problems necessitate a deep understanding of biological concepts and the ability to translate that knowledge into computational frameworks. Students must think critically, break complex problems into manageable components, and develop creative solutions. They often need to engage in self-driven learning, exploring new computational tools and techniques to tackle the challenges.

A computational biology course emphasizes problem-based and open-ended learning, cultivating essential critical thinking, creativity, and adaptability skills. These skills prepare students for careers in computational biology and equip them with the tools to tackle the novel challenges they will face in any scientific field.

Learning happens outside your comfort zone¶

This computational biology course embraces the idea that authentic learning and growth often occur when one steps outside one's comfort zone. This approach is particularly relevant in a field that combines biology's complexities with computational methods' challenges. As you navigate this course, you will encounter concepts, problems, and methodologies that may initially seem daunting or unfamiliar. This is intentional. By pushing the boundaries of your knowledge and skills, you develop resilience, adaptability, and a deeper understanding of the subject matter.

Rest assured that your willingness to step outside your comfort zone will not negatively affect your grade. We have meticulously structured the course to support your growth while ensuring fair and transparent assessment methods.

- Most assignments employ a scaffolded or tiered approach, where more straightforward questions that test foundational knowledge are worth more points than the more challenging, advanced questions.

- Optional "stretch" questions or projects are available for those seeking extra challenges; their bonus points can't negatively affect your grade.

These strategies are designed to create a nurturing and supportive learning environment where you can push your boundaries, take intellectual risks, and grow your skills in computational biology without fear of academic consequences.

Real-world scenarios enhance learning¶

As we delve into computational biology, I want to emphasize the practical relevance of each module. Instead of a traditional approach, we'll use motivating real-world scenarios that underscore the importance of the concepts you'll be learning. Consider yourself a problem solver in a scientific expedition, applying computational tools to tackle actual challenges faced in the field. Our focus will be on tangible applications, from predicting the impact of genetic variations on disease susceptibility to simulating the dynamics of biological systems under different conditions. This approach ensures that what you learn in this course is not confined to theoretical frameworks but has direct implications for understanding and addressing complex biological phenomena.

Connecting you to opportunities¶

The most effective way to get started in computational biology is to actively participate in a research lab at Pitt. This hands-on experience enriches your understanding and provides practical immersion; computational biology research experience is becoming an expectation for remaining competitive in today's job market. The modules in this course support this approach by offering direct links to labs that use tools relevant to the content covered. You should contact these labs to ask whether they have paid or for-credit research positions available.

Single source of truth¶

Each course should have a single source of truth (SSOT): one authoritative source of data and information for the whole course. An SSOT lets you find everything related to the course in one place, which reduces confusion and keeps everyone on the same page. For this course, the SSOT is pitt-biosc1540-2024f.oasci.org.

An SSOT website also helps keep the course content accurate and up to date. Your instructor can change the website as needed, and you will always have access to the most current information. Finally, an SSOT website improves communication between your instructor and the students: important announcements, assignments, and other course-related information are all shared on the website, so everyone stays informed about what is happening in the course.

Everything is a draft¶

Open science and education are core values of mine, and they extend to my teaching. You will likely see me working on material that I will assign an hour or a week later. Teaching new courses or making significant changes always works like this. You are welcome to look ahead, and all drafts are marked with the following admonition. Be aware that if you work ahead on a draft and things change, your time may be lost.

DRAFT

This page is a work in progress and is subject to change at any moment.

I will do my best to avoid mistakes, but they can happen. If you see grammar or spelling issues or need clarification on passages that don't have the DRAFT admonition, feel free to tell me so I can correct them.

Outcomes¶

There are only two required courses in the Bachelor's degree in computational biology where learning computational biology is the focus. After this course, students must take computational genomics (BIOSC 1542) or simulation and modeling (BIOSC 1544). Outcomes for this course are geared towards introducing students to both subfields.

- Understand the fundamental concepts and methodologies in genomics, transcriptomics, and computational structural biology.

- Recognize the applications of computational methods in various biological fields.

- Critically evaluate the strengths and limitations of different computational approaches in biology.

- Interpret and analyze results from various computational biology methods.

- Demonstrate enhanced problem-solving and critical thinking skills in the context of computational biology.

Schedule¶

We start with bioinformatics to learn the fundamentals of genomics and transcriptomics. Afterwards, we will cover structure prediction, molecular simulations, and computer-aided drug design in our computational structural biology module. Our third module, on scientific Python, will introduce how we can use Python in computational biology.

Module 01 - Bioinformatics¶

Week 1¶

Tuesday (Aug 27) Lecture 01

- Topics: Introduction to computational biology.

Thursday (Aug 29) Lecture 02

- Topics: DNA sequencing technologies.

Week 2¶

Tuesday (Sep 3) Lecture 03

- Topics: Quality control.

Thursday (Sep 5) Lecture 04

- Topics: De novo genome assembly.

Week 3¶

Tuesday (Sep 10) Lecture 05

- Topics: Gene annotation.

Thursday (Sep 12) Lecture 06

- Topics: Sequence alignment.

Week 4¶

Tuesday (Sep 17) Lecture 07

- Topics: Introduction to transcriptomics.

Thursday (Sep 19) Lecture 08

- Topics: Read mapping.

Week 5¶

Tuesday (Sep 24) Lecture 09

- Topics: Gene expression quantification.

Thursday (Sep 26) Lecture 10

- Topics: Differential gene expression.

Week 6¶

Tuesday (Oct 1)

- Topics: Review

Thursday (Oct 3)

- Topics: Exam 1

Module 02 - Computational structural biology¶

Week 7¶

Tuesday (Oct 8) Lecture 11

- Topics: Structural biology and determination.

Thursday (Oct 10) Lecture 12

- Topics: Protein structure prediction.

Week 8¶

Tuesday (Oct 15) Fall break (no class)

Thursday (Oct 17) Lecture 13

- Topics: Molecular simulation principles.

Week 9¶

Tuesday (Oct 22) Lecture 14

- Topics: Molecular system representations.

Thursday (Oct 24) Lecture 15

- Topics: Gaining atomistic insights.

Week 10¶

Tuesday (Oct 29) Lecture 16

- Topics: Structure-based drug design.

Thursday (Oct 31) Lecture 17

- Topics: Docking and virtual screening.

Week 11¶

Tuesday (Nov 5) Election day: Go vote (no class)

Thursday (Nov 7) Lecture 18

- Topics: Ligand-based drug design.

Week 12¶

Tuesday (Nov 12) Review

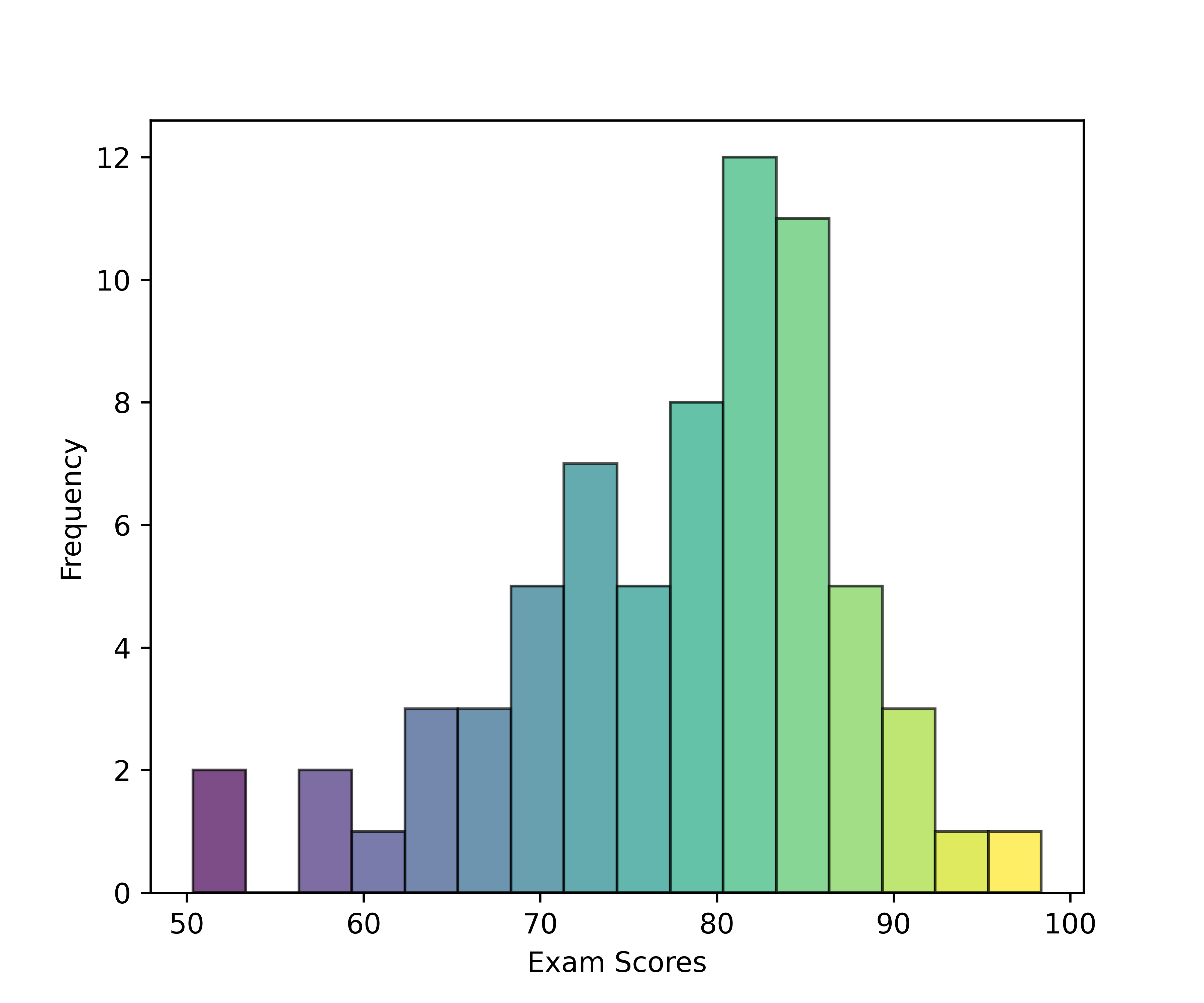

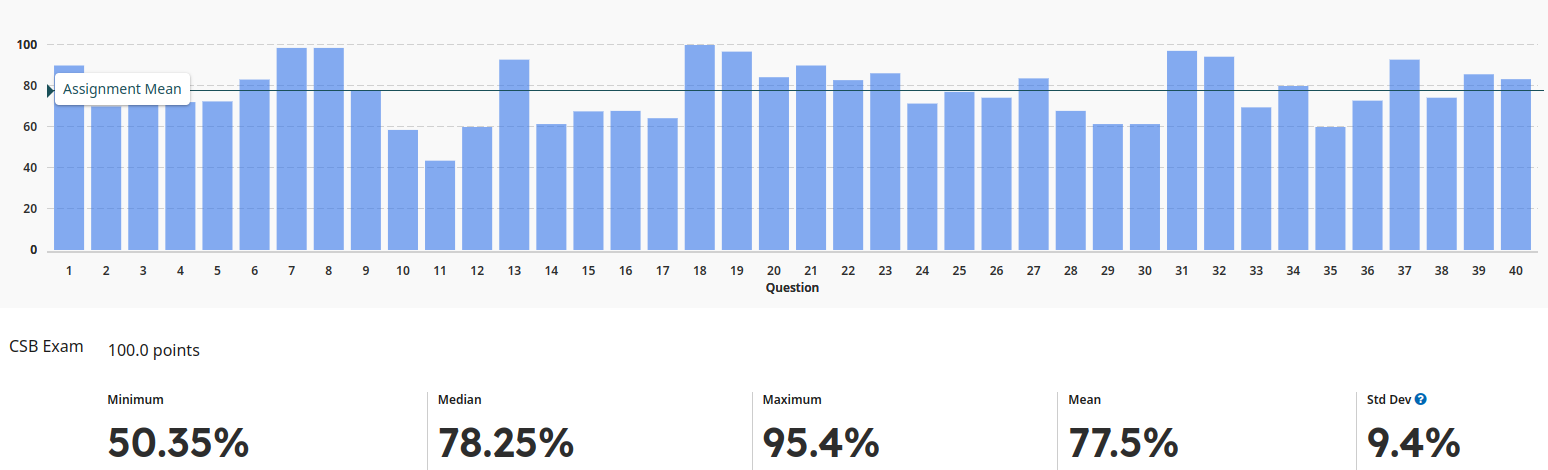

Thursday (Nov 14) Exam 2

Module 03 - Scientific python¶

These are optional lectures that will not negatively affect your final grade.

Week 13¶

Tuesday (Nov 19) Lecture 19

- Topics: Python basics

Thursday (Nov 21) Lecture 20

- Topics: Arrays and plotting

Thanksgiving break¶

No class on Nov 26 and 28.

Week 15¶

Tuesday (Dec 3) Lecture 21

- Topics: Predictive modeling

Thursday (Dec 5) Lecture 22

- Topics: Project work

Week 16¶

Tuesday (Dec 10) Lecture 23

- Topics: Project work

Final¶

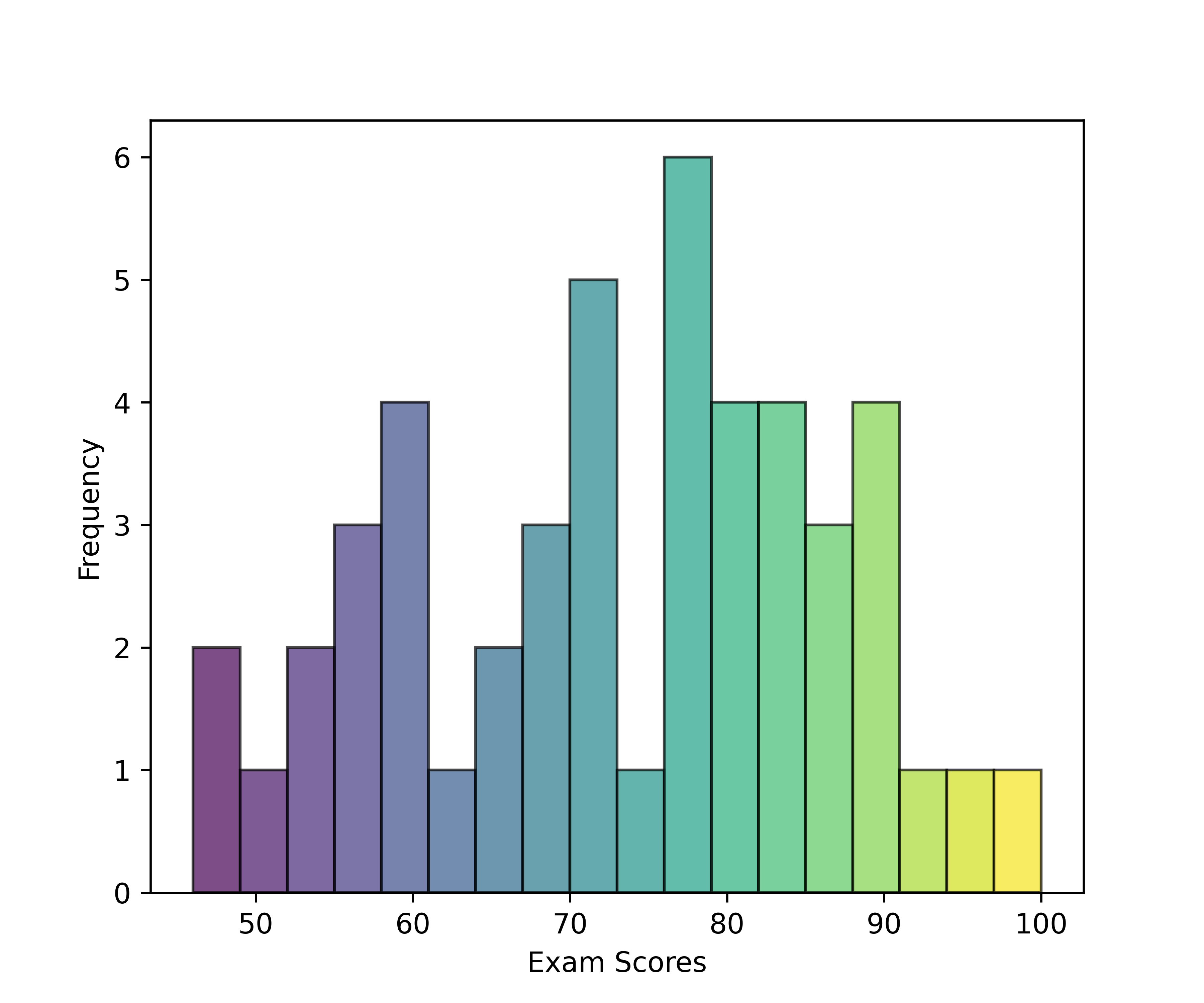

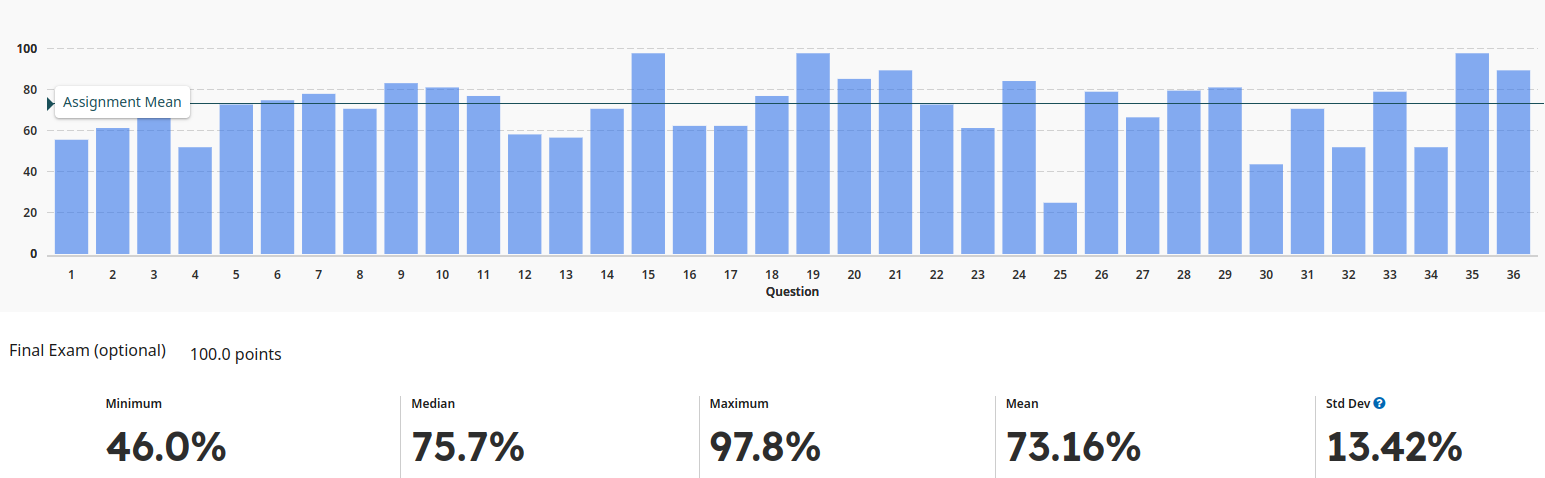

Monday (Dec 16) Final exam

- An optional, cumulative final exam will be offered to replace your lowest exam grade.

Assessments¶

Distribution¶

The course will have the following point distribution.

- Exams (35%)

- Project (30%)

- Assignments (35%)

Late assignments and extensions¶

I am mindful of the diverse nature of deadlines, particularly in the scientific realm. Some are set in stone, while others exhibit more flexibility. It is noteworthy that the scientific community frequently submits manuscripts and reviews days, weeks, or months after the editor's request. Such practices are widely understood. Conversely, submitting a grant application even a minute past the deadline makes it ineligible for review.

I will use the following late assignment and extension policy. It encourages timely submissions while acknowledging the influence of external commitments and unforeseen circumstances.

- Each assignment has a specified due date and time.

- Assignments submitted after the due date will incur a late penalty.

- The late penalty is calculated using the function \(\text{Penalty (\%)} = 0.01 \, (1.4 \times \text{hours late})^2\), rounded to the nearest tenth. This results in approximately:

    | Hours late | Penalty |
    |---|---|
    | 6 | 0.7% |
    | 12 | 2.8% |
    | 24 | 11.3% |
    | 48 | 45.2% |
    | 72 | 100.0% |

- Assignments will not be accepted more than 72 hours (3 days) after the due date.

- The penalty is applied to the assignment's total possible points. For example, if an assignment is worth 100 points and is submitted 36 hours late, the penalty would be approximately 25.4 points.

- To reward punctuality, each assignment submitted on time will earn a 2% bonus on that assignment's score.

- These on-time bonuses will accumulate throughout the semester and will be added to your final course grade.
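The penalty function above can be sketched in Python for anyone who wants to check their own numbers. This is an illustrative sketch rather than the official grading code: the function name `late_penalty_percent` is hypothetical, and capping the result at 100% is an assumption based on the 72-hour row of the penalty table.

```python
def late_penalty_percent(hours_late: float) -> float:
    """Percent penalty for a late submission, following the syllabus formula."""
    if hours_late <= 0:
        return 0.0  # on-time submissions incur no penalty
    # 0.01 * (1.4 * hours late)^2, rounded to the nearest tenth.
    penalty = round(0.01 * (1.4 * hours_late) ** 2, 1)
    # Assumption: the penalty never exceeds 100% of the assignment's points.
    return min(penalty, 100.0)


# Reproduce the rows of the penalty table.
for hours in (6, 12, 24, 48, 72):
    print(f"{hours:>2} hours late -> {late_penalty_percent(hours):.1f}% penalty")
```

Because the penalty grows with the square of the hours late, doubling the delay roughly quadruples the penalty, so the first few hours cost comparatively little.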

Exceptions to this policy will be made on a case-by-case basis for extenuating circumstances. Please communicate with me as early as possible if you anticipate difficulties meeting a deadline.

Submitting your assignments on time can earn you up to a 2% bonus added to your final grade. For each assignment you submit on time, you will receive a proportional percentage of this bonus. For example, if you submit 7 out of 8 assignments on time, you would receive a 1.75% boost to your final grade. This bonus can help improve your final grade, but please note that I will not round up final grades.
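The proportional bonus described above amounts to a one-line calculation. The sketch below is only an illustration; the function name `on_time_bonus` and its parameters are hypothetical, and the 2% maximum is taken from the paragraph above.

```python
def on_time_bonus(on_time: int, total: int, max_bonus: float = 2.0) -> float:
    """Bonus (in percentage points) added to the final grade."""
    if total <= 0:
        raise ValueError("total must be a positive number of assignments")
    if not 0 <= on_time <= total:
        raise ValueError("on_time must be between 0 and total")
    # Each on-time assignment earns an equal share of the maximum bonus.
    return max_bonus * on_time / total


# The example from the text: 7 of 8 assignments submitted on time.
print(on_time_bonus(7, 8))  # 1.75
```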

Missed exams¶

Attendance and participation in all scheduled exams are crucial for your success in this course. To accommodate unforeseen circumstances while maintaining academic standards, please be aware of the following policy:

Due to time constraints and the difficulty of creating equivalent assessments, makeup exams will only be offered under extenuating circumstances. Instead, an optional cumulative final exam will be available at the end of the course. This final exam can replace your lowest midterm exam grade if it benefits your overall score. If the final exam score is lower than your existing midterm grades, it will not be counted, ensuring it cannot negatively impact your grade.

If you miss one of the midterm exams, you are highly encouraged to take the cumulative final exam. The score you achieve on the final exam will replace the missed midterm exam grade in calculating your final grade. Missing both midterm exams poses a significant challenge to course completion; in such cases, you must contact me immediately to discuss your situation and explore possible options.
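The replacement rule can be expressed as a short Python sketch. It is not the official grade calculation: the function name `effective_exam_scores` is hypothetical, and treating a missed exam (`None`) as a zero until the final replaces it is an assumption made for illustration.

```python
from typing import Optional, Sequence


def effective_exam_scores(
    midterms: Sequence[Optional[float]],
    final: Optional[float] = None,
) -> list:
    """Return midterm scores after the optional final replaces the lowest one."""
    # Assumption: a missed exam (None) counts as 0 until it is replaced.
    scores = [0.0 if score is None else float(score) for score in midterms]
    if final is not None and scores:
        lowest = min(range(len(scores)), key=scores.__getitem__)
        # The final only counts when it improves on the lowest midterm.
        if final > scores[lowest]:
            scores[lowest] = float(final)
    return scores


print(effective_exam_scores([80, 90], final=85))    # final replaces the 80
print(effective_exam_scores([80, 90], final=70))    # final is ignored
print(effective_exam_scores([None, 90], final=75))  # final replaces the missed exam
```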

Scale¶

Letter grades for this course will be assigned based on Pitt's recommended scale (shown below).

| Letter grade | Percentage | GPA |
|---|---|---|
| A+ | 97.0 - 100.0% | 4.00 |
| A | 93.0 - 96.9% | 4.00 |
| A- | 90.0 - 92.9% | 3.75 |
| B+ | 87.0 - 89.9% | 3.25 |
| B | 83.0 - 86.9% | 3.00 |
| B- | 80.0 - 82.9% | 2.75 |
| C+ | 77.0 - 79.9% | 2.25 |
| C | 73.0 - 76.9% | 2.00 |
| C- | 70.0 - 72.9% | 1.75 |
| D+ | 67.0 - 69.9% | 1.25 |
| D | 63.0 - 66.9% | 1.00 |
| D- | 60.0 - 62.9% | 0.75 |
| F | 0.0 - 59.9% | 0.00 |
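As a rough way to estimate a letter grade, the weights from the Distribution section and the cutoffs in the table above can be combined in a few lines of Python. This is a sketch under stated assumptions: the names `course_percent` and `letter_grade` are hypothetical, each category score is assumed to be a percentage from 0 to 100, and no rounding is applied, in keeping with the note that final grades are not rounded up.

```python
# Category weights from the Distribution section.
WEIGHTS = {"exams": 0.35, "project": 0.30, "assignments": 0.35}

# Lower bound (percent) of each letter grade, from the table above.
GRADE_CUTOFFS = [
    (97.0, "A+"), (93.0, "A"), (90.0, "A-"),
    (87.0, "B+"), (83.0, "B"), (80.0, "B-"),
    (77.0, "C+"), (73.0, "C"), (70.0, "C-"),
    (67.0, "D+"), (63.0, "D"), (60.0, "D-"),
]


def course_percent(exams: float, project: float, assignments: float) -> float:
    """Weighted course percentage from the three category percentages."""
    return (
        WEIGHTS["exams"] * exams
        + WEIGHTS["project"] * project
        + WEIGHTS["assignments"] * assignments
    )


def letter_grade(percent: float) -> str:
    """Map a course percentage to a letter grade without rounding up."""
    for cutoff, letter in GRADE_CUTOFFS:
        if percent >= cutoff:
            return letter
    return "F"


total = course_percent(exams=90, project=80, assignments=100)
print(f"{total:.1f}% -> {letter_grade(total)}")
```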


Attendance and participation¶

While attendance is not mandatory, active participation is strongly encouraged and integral to your course success. Regular class attendance offers several benefits:

- Enhanced understanding of course material through engaging discussions;

- Opportunities for collaborative learning through group activities;

- Practical application of concepts via hands-on exercises.

Please note that while it is possible to achieve the maximum number of points without attending lectures, consistent attendance demonstrates commitment to the course. This commitment may be considered when addressing individual circumstances or requests for flexibility.

Important

Virtual attendance (e.g., via Zoom) is unavailable for this course. All class sessions will be conducted in person.

Policies¶

Generative AI¶

We are in an exciting era of generative AI development with the release of tools such as ChatGPT, DALL-E, GitHub Copilot, Bing Chat, Bard, Copy.ai, and many more. This course permits the ethical and responsible use of these tools except when explicitly noted. For example, you can use these tools as an on-demand tutor by asking them to explain complex topics.

Other ways are undoubtedly possible, but any use should aid—not replace—your learning. You must also be aware of the following aspects of generative AI.

- AI limitations: While AI programs can be valuable resources, they may produce inaccurate, biased, or incomplete material. Each program has its unique limitations as well.

- Bias and accuracy: Scrutinizing every aspect of the enormous data sets used to train these products is infeasible. AI will inherit biases and inaccuracies from these sources and from human influences in fine-tuning. You must be critical and skeptical of anything generated from these models and verify information from trusted sources.

- Critical thinking: Understand that AI is a tool, not a replacement for your analysis and critical thinking skills. Use AI to enhance your understanding and productivity, but remember that your development as a scholar depends on your ability to engage independently with the material.

- Academic integrity: Plagiarism extends to content generated by AI. Using AI-generated material without proper attribution is a violation of academic integrity policies. Always give credit to AI-generated content and adhere to citation rules.

    Furthermore, text from AI tools should be treated as someone else's work, because it is. You should never copy and paste text directly.

- AI detection: As discussed here, the University Center for Teaching and Learning does not recommend using AI detection tools like Turnitin due to high false positive rates. I will not use AI detection tools in any capacity for this course and trust that you will use these tools responsibly when permitted and desired.

Remember that generative AI is helpful when used responsibly. You can ethically benefit from these technological advances by adhering to these guidelines. Embrace this opportunity to expand your skill set and engage thoughtfully with emerging technologies. If you have any questions about AI tool usage, please get in touch with me for clarification and guidance.

Equity, diversity, and inclusion¶

The University of Pittsburgh does not tolerate any form of discrimination, harassment, or retaliation based on disability, race, color, religion, national origin, ancestry, genetic information, marital status, familial status, sex, age, sexual orientation, veteran status or gender identity or other factors as stated in the University's Title IX policy. The University is committed to taking prompt action to end a hostile environment that interferes with the University's mission. For more information about policies, procedures, and practices, visit the Civil Rights & Title IX Compliance web page.

I ask that everyone in the class strive to help ensure that other members of this class can learn in a supportive and respectful environment. If there are instances of the aforementioned issues, please contact the Title IX Coordinator, by calling 412-648-7860 or emailing titleixcoordinator@pitt.edu. Reports can also be filed online. You may also choose to report this to a faculty/staff member; they are required to communicate this to the University's Office of Diversity and Inclusion. If you wish to maintain complete confidentiality, you may also contact the University Counseling Center (412-648-7930).

Academic integrity¶

Students in this course will be expected to comply with the University of Pittsburgh's Policy on Academic Integrity. Any student suspected of violating this obligation during the semester will be required to participate in the procedural process initiated at the instructor level, as outlined in the University Guidelines on Academic Integrity. This may include, but is not limited to, the confiscation of the examination of any individual suspected of violating University Policy. Furthermore, no student may bring unauthorized materials to an exam, including dictionaries and programmable calculators.

To learn more about Academic Integrity, visit the Academic Integrity Guide for an overview. For hands-on practice, complete the Understanding and Avoiding Plagiarism tutorial.

Disability services¶

If you have a disability for which you are or may be requesting an accommodation, you are encouraged to contact both your instructor and Disability Resources and Services (DRS), 140 William Pitt Union, (412) 648-7890, drsrecep@pitt.edu, (412) 228-5347 for P3 ASL users, as early as possible in the term.

DRS will verify your disability and determine reasonable accommodations for this course.

Email communication¶

Upon admittance, each student is issued a University email address (username@pitt.edu).

The University may use this email address for official communication with students.

Students are expected to read emails sent to this account regularly.

Failure to read and react to University communications promptly does not absolve the student from knowing and complying with the content of the communications.

The University provides an email forwarding service that allows students to read their email via other service providers (e.g., Gmail, AOL, Yahoo).

Students who forward their email from their pitt.edu address to another address do so at their own risk.

If email is lost due to forwarding, it does not absolve the student from responding to official communications sent to their University email address.

Religious observances¶

The observance of religious holidays (activities observed by a religious group of which a student is a member) and cultural practices are an important reflection of diversity. As your instructor, I am committed to providing equivalent educational opportunities to students of all belief systems. At the beginning of the semester, you should review the course requirements to identify foreseeable conflicts with assignments, exams, or other required attendance. If possible, please contact me within the first two weeks of the first class meeting to allow time for us to discuss and make fair and reasonable adjustments to the schedule and/or tasks.

Sexual misconduct, required reporting, and Title IX¶

If you are experiencing sexual assault, sexual harassment, domestic violence, or stalking, please report it to me and I will connect you to University resources to support you.

University faculty and staff members are required to report all instances of sexual misconduct, including harassment and sexual violence to the Office of Civil Rights and Title IX. When a report is made, individuals can expect to be contacted by the Title IX Office with information about support resources and options related to safety, accommodations, process, and policy. I encourage you to use the services and resources that may be most helpful to you.

As your instructor, I am required to report any incidents of sexual misconduct that are directly reported to me. You can also report directly to the Office of Civil Rights and Title IX: 412-648-7860 (M-F; 8:30 am - 5:00 pm) or via the Pitt Concern Connection at: Make A Report.

An important exception to the reporting requirement exists for academic work. Disclosures about sexual misconduct that are shared as a relevant part of an academic project, classroom discussion, or course assignment, are not required to be disclosed to the University's Title IX office.

If you wish to make a confidential report, Pitt encourages you to reach out to these resources:

- The University Counseling Center: 412-648-7930 (M-F; 8:30 am - 5:00 pm) and 412-648-7856 (after business hours)

- Pittsburgh Action Against Rape (community resource): 1-866-363-7273 (24/7)

If you have an immediate safety concern, please contact the University of Pittsburgh Police at 412-624-2121.

Any form of sexual harassment or violence will not be excused or tolerated at the University of Pittsburgh.

For additional information, please visit the full syllabus statement on the Office of Diversity, Equity, and Inclusion webpage.

Statement on classroom recording¶

To ensure the free and open discussion of ideas, students may not record classroom lectures, discussions and/or activities without the advance written permission of the instructor, and any such recording properly approved in advance can be used solely for the student's private use.

Ended: Syllabus

Lectures ↵

Lectures¶

We have 22 lectures spanning three modules:

Bioinformatics ↵

Bioinformatics¶

In this module, we cover experimental and computational foundations of genomics and transcriptomics.

Lecture 01

Computational biology course overview and foundations

Date: Aug 27, 2024

This inaugural lecture serves as a comprehensive introduction to the field of computational biology and establishes the framework for the course.

Learning objectives¶

What you should be able to do after today's lecture:

- Describe the course structure, expectations, and available resources for success.

- Define computational biology and explain its interdisciplinary nature.

- Identify key applications and recent advancements.

- Understand the balance between applications and development.

- Identify potential career paths and educational opportunities.

Presentation¶

Download: biosc1540-l01.pdf

Lecture 02

DNA sequencing

Date: Aug 29, 2024

The session traces the evolution of DNA sequencing technologies, commencing with the seminal Sanger method and progressing to state-of-the-art Illumina and Nanopore platforms. Through a comparative analysis of these technologies, we will elucidate the rapid advancements that have catalyzed genomic research. The discourse will encompass a critical evaluation of the strengths and limitations inherent to each method, offering insights into their optimal applications in contemporary research contexts. The lecture culminates with an in-depth overview of a typical DNA sequencing workflow, providing students with a pragmatic understanding of genomic data generation.

Learning objectives¶

What you should be able to do after today's lecture:

- Analyze antibiotic resistance challenges and evaluate genomics' role in mitigating them.

- Construct a general workflow intrinsic to DNA sequencing experiments.

- Delineate the core principles underlying Sanger sequencing.

- Conduct a comparative analysis of Illumina sequencing vis-à-vis Sanger sequencing.

- Explicate the fundamental principles governing Nanopore sequencing technology.

Readings¶

Relevant content for today's lecture.

- Sequencing background

- DNA sequencing

- DNA sample preparation

- PCR

- Sanger sequencing

- Illumina sequencing

- Nanopore sequencing

Presentation¶

Download: biosc1540-l02.pdf

Lecture 03: Quality control ↵

Lecture 03

Quality control

Date: Sep 3, 2024

This session focuses on the critical steps of quality control and preprocessing in genomic data analysis. We will explore the fundamentals of sequencing data formats and delve into essential techniques for assessing and improving data quality.

Learning objectives¶

What you should be able to do after today's lecture:

- Explain the basic concepts and importance of genome assembly.

- Interpret FASTA and FASTQ file formats and their role in storing sequences.

- Perform and interpret quality control on reads using FastQC.

- Identify common quality issues in sequencing data and explain their impacts.

- Describe the process and importance of sequence trimming and filtering.
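To make the FASTQ objective above more concrete, here is a minimal Python sketch that reads four-line FASTQ records and decodes the quality string into Phred scores. It assumes the common Phred+33 encoding and unwrapped, four-line records. The function names `phred_scores` and `parse_fastq` and the example read are hypothetical, and this is not how FastQC itself is implemented.

```python
def phred_scores(quality: str, offset: int = 33) -> list[int]:
    """Decode a FASTQ quality string into Phred scores (Phred+33 assumed)."""
    return [ord(char) - offset for char in quality]


def parse_fastq(text: str) -> list[dict]:
    """Parse unwrapped FASTQ text (four lines per record) into dictionaries."""
    lines = [line.strip() for line in text.strip().splitlines()]
    if len(lines) % 4 != 0:
        raise ValueError("expected four lines per FASTQ record")
    records = []
    for i in range(0, len(lines), 4):
        header, sequence, separator, quality = lines[i:i + 4]
        if not header.startswith("@") or not separator.startswith("+"):
            raise ValueError(f"malformed record starting at line {i + 1}")
        if len(sequence) != len(quality):
            raise ValueError(f"sequence/quality length mismatch in {header}")
        records.append(
            {"id": header[1:], "sequence": sequence, "quality": phred_scores(quality)}
        )
    return records


# Hypothetical read: six high-quality bases followed by one low-quality base.
example = "@read1\nGATTACA\n+\nIIIIII#\n"
for record in parse_fastq(example):
    scores = record["quality"]
    print(record["id"], record["sequence"], scores)
    print(f"mean quality: {sum(scores) / len(scores):.1f}")
```

A Phred score Q corresponds to an estimated error probability of 10^(-Q/10), so the trailing `#` (Q2) marks a base that is more likely wrong than right and is the kind of position that trimming removes.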

Readings¶

Relevant content for today's lecture.

- Genome assembly

- Assembly concepts and nested content

- FASTA files

- FASTQ files

- FastQC and nested content

Presentation¶

Download: biosc1540-l03.pdf


Ended: Lecture 03: Quality control

Lecture 04

De novo genome assembly

Date: Sep 5, 2024

This lecture aims to provide a thorough understanding of de novo assembly techniques, their applications, and their crucial role in advancing genomic research.

Learning objectives¶

What you should be able to do after today's lecture:

- Explain the fundamental challenge of reconstructing a complete genome.

- Describe and apply the principles of the greedy algorithm.

- Understand and construct de Bruijn graphs.
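
To make the de Bruijn objective concrete, here is a minimal sketch that builds a graph from a couple of toy reads. It assumes the common convention where nodes are (k-1)-mers and every k-mer occurrence contributes one edge; the reads and the value of `k` are made up for illustration.

```python
from collections import defaultdict


def de_bruijn(reads, k):
    """Map each (k-1)-mer prefix to the list of (k-1)-mer suffixes that follow it."""
    graph = defaultdict(list)
    for read in reads:
        # Slide a window of size k over the read; each k-mer is one edge.
        for i in range(len(read) - k + 1):
            kmer = read[i:i + k]
            graph[kmer[:-1]].append(kmer[1:])
    return dict(graph)


toy_reads = ["ATGGC", "GGCGT"]  # hypothetical overlapping reads
graph = de_bruijn(toy_reads, k=3)

for node, successors in graph.items():
    print(node, "->", successors)
```

Repeated edges are kept on purpose, since the multiplicity of a k-mer carries coverage information that an assembler can use.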

Readings¶

Relevant content for today's lecture.

- Greedy algorithm

- de Bruijn

- JHU

- JHU2

- Why are de Bruijn graphs useful for genome assembly?

- Read mapping on de Bruijn graphs

- Galaxy

- Data science for HTS

- SPAdes assembler

- Online graph builder. Note that they use knode values.

Presentation¶

Download: biosc1540-l04.pdf

Lecture 05

Gene annotation

Date: Sep 10, 2024

This lecture covers the fundamental concepts and techniques of gene annotation, a critical process in genomics for identifying and characterizing genes within DNA sequences. We'll explore various computational methods used in gene annotation, their applications, and challenges.

Learning Objectives¶

By the end of this lecture, you should be able to:

- Explain the graph traversal and contig extraction process in genome assemblers.

- Understand key output files and quality metrics of genome assembly results.

- Define gene annotation and describe its key components.

- Outline the main computational methods used in gene prediction and annotation.

- Analyze and interpret basic gene annotation data and outputs.

Readings¶

Relevant content for today's lecture.

- None! Just the lecture.

Presentation¶

Download: biosc1540-l05.pdf

Lecture 06

Sequence alignment

Date: Sep 12, 2024

In this lecture, we will explore sequence alignment, a fundamental technique in computational biology. We will cover the basics of alignment scoring, key algorithms for both pairwise and multiple sequence alignments, and learn how to interpret alignment results.

Learning objectives¶

What you should be able to do after today's lecture:

- Define sequence alignment and explain its importance in bioinformatics.

- Describe the basic principles of scoring systems in sequence alignment.

- Explain the principles and steps of global alignment using the Needleman-Wunsch algorithm.

- Describe the concept and procedure of local alignment using the Smith-Waterman algorithm.

- Introduce the concept of multiple sequence alignment, including its importance and challenges.
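
The global alignment objective can be sketched with a short dynamic-programming routine in the style of Needleman-Wunsch. The scoring values (match +1, mismatch -1, linear gap -1) are assumptions chosen for illustration, and the function returns only the optimal score, not the alignment itself.

```python
def needleman_wunsch_score(a, b, match=1, mismatch=-1, gap=-1):
    """Return the optimal global alignment score of sequences a and b."""
    rows, cols = len(a) + 1, len(b) + 1
    score = [[0] * cols for _ in range(rows)]

    # First row and column: aligning a prefix against nothing costs only gaps.
    for i in range(1, rows):
        score[i][0] = i * gap
    for j in range(1, cols):
        score[0][j] = j * gap

    # Each cell takes the best of: diagonal (match/mismatch), up (gap), left (gap).
    for i in range(1, rows):
        for j in range(1, cols):
            diag = score[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = score[i - 1][j] + gap
            left = score[i][j - 1] + gap
            score[i][j] = max(diag, up, left)

    return score[-1][-1]


print(needleman_wunsch_score("ACGT", "AGT"))
```

Local alignment in the style of Smith-Waterman uses the same recurrence but clamps every cell at zero and reports the maximum cell instead of the bottom-right corner.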

Readings¶

Relevant content for today's lecture.

- Global alignment

- Local alignment

- Online tools for Needleman-Wunsch and Smith-Waterman.

Presentation¶

Download: biosc1540-l06.pdf

Lecture 07

Introduction to transcriptomics

Date: Sep 17, 2024

By the end of this lecture, you'll have a comprehensive understanding of transcriptomics' power in unraveling the intricacies of gene expression and its broad applications in biological research.

Learning objectives¶

What you should be able to do after today's lecture:

- Define transcriptomics and explain its role in understanding gene expression patterns.

- Discuss emerging trends in transcriptomics.

- Compare and contrast transcriptomics and genomics.

- Explain the principles of RNA-seq technology and its advantages over previous methods.

- Outline the computational pipeline for RNA-seq data analysis.

Readings¶

Relevant content for today's lecture.

- None! Just the presentation.

Presentation¶

Download: biosc1540-l07.pdf

Lecture 08

Read mapping

Date: Sep 19, 2024

We'll explore the challenges of aligning millions of short reads to a reference genome and discuss various algorithms and data structures that make this process efficient. The session will focus on the Burrows-Wheeler Transform (BWT) and the FM-index, two key concepts that revolutionized read alignment by enabling fast, memory-efficient sequence searching. We'll examine how these techniques are implemented in popular alignment tools and compare their performance characteristics.

Learning objectives¶

What you should be able to do after today's lecture:

- Describe the challenges of aligning short reads to a large reference genome.

- Compare read alignment algorithms, including hash-based and suffix tree-based approaches.

- Explain the basic principles of the Burrows-Wheeler Transform (BWT) for sequence alignment.
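
For intuition about the BWT, the sketch below builds the transform by sorting all rotations of a text and then inverts it. This is a deliberately naive version for small strings; it assumes `$` is a sentinel that does not occur in the text and sorts before every other character. Real aligners rely on suffix arrays and the FM-index rather than materializing rotations.

```python
def bwt(text):
    """Return the Burrows-Wheeler Transform of text, using '$' as the sentinel."""
    text += "$"
    rotations = sorted(text[i:] + text[:i] for i in range(len(text)))
    # The transform is the last column of the sorted rotation matrix.
    return "".join(rotation[-1] for rotation in rotations)


def inverse_bwt(last_column):
    """Recover the original text by repeatedly prepending and re-sorting."""
    table = [""] * len(last_column)
    for _ in range(len(last_column)):
        table = sorted(ch + row for ch, row in zip(last_column, table))
    original = next(row for row in table if row.endswith("$"))
    return original[:-1]


transformed = bwt("banana")
print(transformed)  # annb$aa
print(inverse_bwt(transformed))  # banana
```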

Readings¶

Relevant content for today's lecture.

- Burrows-Wheeler transform

- Suffix trees

- Suffix arrays

Presentation¶

Download: biosc1540-l08.pdf

Lecture 09

Gene expression quantification

Date: Sep 24, 2024

We'll explore how raw sequencing reads are transformed into meaningful measures of gene activity, navigating the complexities of multi-mapped reads and isoform variations. The session will compare various quantification metrics, from traditional RPKM to more recent innovations like TPM, highlighting their strengths and limitations. We'll examine cutting-edge tools for transcript-level quantification and discuss the crucial role of normalization in generating comparable expression data across samples. Through practical examples, students will learn to interpret gene expression results, bridging the gap between computational output and biological insight.

Learning objectives¶

What you should be able to do after today's lecture:

- Discuss the importance of normalization and quantification in RNA-seq data analysis.

- Explain the relevance of pseudoalignment instead of read mapping.

- Understand the purpose of Salmon's generative model.

- Describe how Salmon handles experimental biases in transcriptomics data.

- Communicate the principles of inference in Salmon.
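
The comparison between RPKM and TPM mentioned in the lecture description can be sketched with a few lines of arithmetic. The counts and transcript lengths below are hypothetical, and the formulas are the standard textbook definitions rather than anything specific to Salmon.

```python
def rpkm(counts, lengths_bp):
    """Reads per kilobase of transcript per million mapped reads."""
    total_reads = sum(counts)
    return [
        count / (length / 1e3) / (total_reads / 1e6)
        for count, length in zip(counts, lengths_bp)
    ]


def tpm(counts, lengths_bp):
    """Transcripts per million: length-normalize first, then rescale to sum to 1e6."""
    rates = [count / (length / 1e3) for count, length in zip(counts, lengths_bp)]
    scale = sum(rates) / 1e6
    return [rate / scale for rate in rates]


# Hypothetical read counts and transcript lengths for three transcripts.
counts = [100, 200, 300]
lengths_bp = [1000, 2000, 3000]

print(rpkm(counts, lengths_bp))
print(tpm(counts, lengths_bp))
```

Because TPM values always sum to one million within a sample, they are easier to compare across samples than RPKM values, whose total depends on the length distribution of the expressed transcripts.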

Readings¶

Relevant content for today's lecture.

Presentation¶

Download: biosc1540-l09.pdf

Lecture 10

Differential gene expression

Date: Sep 26, 2024

This lecture explores differential gene expression analysis, a powerful approach for uncovering the molecular basis of biological phenomena.

Learning objectives¶

What you should be able to do after today's lecture:

- Define differential gene expression and explain its importance.

- Describe the statistical principles underlying differential expression analysis.

- Outline the steps in a typical differential expression analysis workflow.

- Explain key concepts such as fold change, p-value, and false discovery rate.

- Interpret common visualizations used in differential expression analysis.
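
Two of the concepts listed above, fold change and false discovery rate, can be illustrated with a short sketch. The Benjamini-Hochberg procedure used here is one common way to control the false discovery rate; it is an assumption for illustration, and the p-values are made up.

```python
import math


def log2_fold_change(treated, control):
    """Log2 ratio of mean expression in treated versus control samples."""
    return math.log2(treated / control)


def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotonic.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


print(log2_fold_change(8.0, 2.0))
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```

A volcano plot combines the two quantities by placing the log2 fold change on the x-axis and the negative log10 of the p-value on the y-axis.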

Readings¶

Relevant content for today's lecture.

- None! Just the slides.

Presentation¶

Download: biosc1540-l10.pdf

Computational structural biology¶

In this module, we cover computational structural biology.

Lecture 11

Structural biology

Date: Oct 8, 2024

This lecture introduces the field of computational structural biology and its interplay with experimental methods. We'll explore how computational approaches complement and enhance traditional structural biology techniques, providing insights into protein structure, function, and dynamics. The session will cover key experimental methods like X-ray crystallography and cryo-EM, highlighting their strengths and limitations.

Learning objectives¶

What you should be able to do after today's lecture:

- Categorize atomic interactions and their importance.

- Explain what structural biology is and why it is important.

- Communicate the basics of X-ray crystallography.

- Find and analyze protein structures in the Protein Data Bank (PDB).

- Compare and contrast cryo-EM with X-ray crystallography.

- Understand the experimental challenges of disorder.

- Know why protein structure prediction is helpful.

Readings¶

Relevant content for today's lecture.

- None! Just the lecture.

Presentation¶

Download: biosc1540-l11.pdf

Lecture 12

Protein structure prediction

Date: Oct 10, 2024

We'll explore how amino acid sequences are transformed into three-dimensional structures through computational methods. The session will cover various approaches, from traditional homology modeling to cutting-edge deep learning techniques like AlphaFold. We'll examine the principles underlying these methods, their applications, and their impact on biological research.

Learning objectives¶

What you should be able to do after today's lecture:

- Explain why we are learning about protein structure prediction.

- Identify what makes structure prediction challenging.

- Explain homology modeling.

- Know when to use threading instead of homology modeling.

- Interpret a contact map for protein structures.

- Comprehend how coevolution provides structural insights.

- Explain why ML models dominate protein structure prediction.

Readings¶

Relevant content for today's lecture.

- None! Just the lecture.

Presentation¶

Download: biosc1540-l12.pdf

Lecture 13

Molecular simulation principles

Date: Oct 17, 2024

This lecture introduces the fundamental principles of molecular dynamics (MD) simulations. We'll explore the theoretical foundations of MD, including force fields and integration algorithms. The session will cover the basic concepts needed to understand how MD simulations provide a dynamic view of molecular systems, complementing static structural data.

Learning objectives¶

What you should be able to do after today's lecture:

- Understand the importance of molecular dynamics (MD) simulations for proteins.

- Identify the validity of the classical approximation.

- Discuss the concept of equations of motion in MD simulations.

- Explain the role of integration algorithms in MD simulations.

- Describe the components of a molecular mechanics force field.

- Understand noncovalent contributions to force fields.

- Identify data for force field parameterization.

Readings¶

Relevant content for today's lecture.

- None! Just the slides (and Google).

Presentation¶

Download: biosc1540-l13.pdf

Lecture 14

Molecular system representations

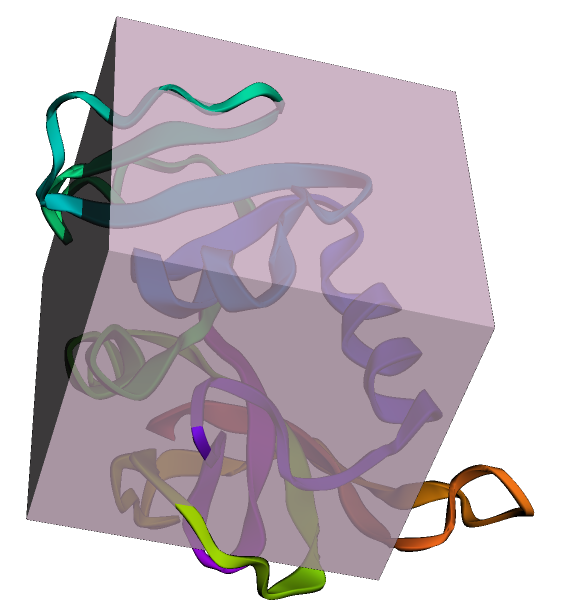

Date: Oct 22, 2024

Building on the foundations from the previous lecture, this session focuses on the practical aspects of setting up and running MD simulations. We'll walk through the steps involved in preparing a system for simulation.

Note

I decided to go over MD relaxations a second time for L15 and moved those slides there.

Learning objectives¶

What you should be able to do after today's lecture:

- Explain why DHFR is a promising drug target.

- Select and prepare a protein structure for molecular simulations.

- Explain the importance of approximating molecular environments.

- Describe periodic boundary conditions and their role in MD simulations.

- Explain the role of force field selection and topology generation.

- Outline the process of energy minimization and its significance.

Readings¶

Relevant content for today's lecture if you are interested.

Presentation¶

Download: biosc1540-l14.pdf

Lecture 15

Ensembles and atomistic insights

Date: Oct 24, 2024

This final lecture in the MD series focuses on the analysis and interpretation of MD simulation data. We'll explore common analysis techniques and how to extract meaningful biological insights from simulation trajectories.

Learning objectives¶

After today, you should better understand:

- Molecular ensembles and their relevance.

- Maintaining thermodynamic equilibrium.

- Relaxation and production MD simulations.

- RMSD and RMSF as metrics of conformational change and flexibility.

- Relationship between probability and energy in simulations.
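
The RMSD and RMSF metrics listed above can be sketched in plain Python. This version assumes the frames are already superimposed on a common reference (no alignment step is performed) and that every atom has equal weight; the coordinates are made up.

```python
import math


def rmsd(frame_a, frame_b):
    """Root-mean-square deviation between two frames of (x, y, z) coordinates."""
    n_atoms = len(frame_a)
    total = sum(
        (a - b) ** 2
        for atom_a, atom_b in zip(frame_a, frame_b)
        for a, b in zip(atom_a, atom_b)
    )
    return math.sqrt(total / n_atoms)


def rmsf(trajectory):
    """Per-atom root-mean-square fluctuation about the mean position over all frames."""
    n_frames = len(trajectory)
    n_atoms = len(trajectory[0])
    result = []
    for i in range(n_atoms):
        mean = [sum(frame[i][d] for frame in trajectory) / n_frames for d in range(3)]
        msd = sum(
            sum((frame[i][d] - mean[d]) ** 2 for d in range(3))
            for frame in trajectory
        ) / n_frames
        result.append(math.sqrt(msd))
    return result


# Hypothetical two-atom system observed in two frames.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
moved = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0)]

print(rmsd(reference, moved))
print(rmsf([reference, moved]))
```

RMSD gives one number per frame, describing how far the whole structure has moved, whereas RMSF gives one number per atom, describing how flexible that atom is over the trajectory.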

Readings¶

Relevant content for today's lecture.

- Any scientific literature.

Presentation¶

Download: biosc1540-l15.pdf

Lecture 16

Structure-based drug design

Date: Oct 29, 2024

After today, you should have a better understanding of:

- Drug development pipeline.

- Role of structure-based drug design.

- Thermodynamics of binding.

- Enthalpic contributions to binding.

- Entropic contributions to binding.

- Alchemical free energy simulations.

Presentation¶

Download: biosc1540-l16.pdf

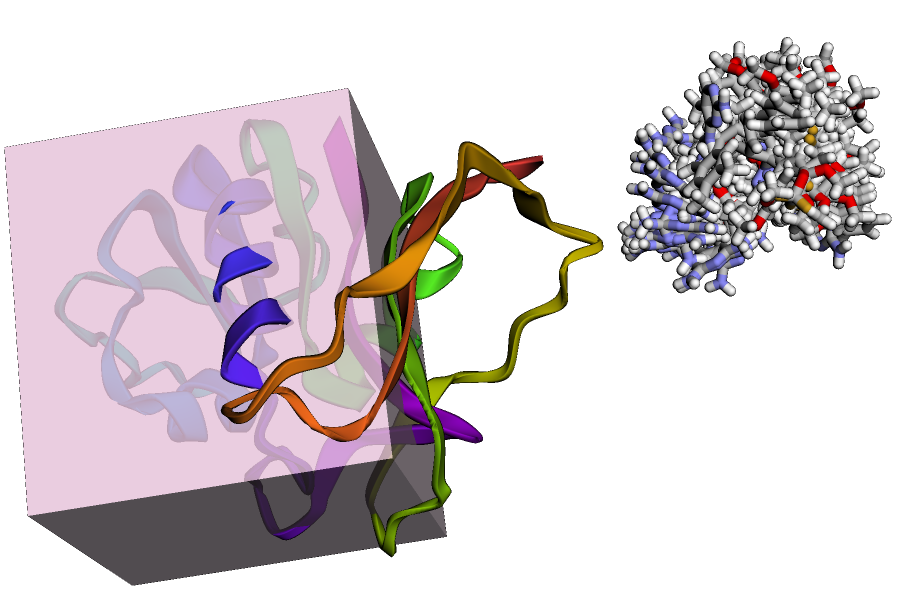

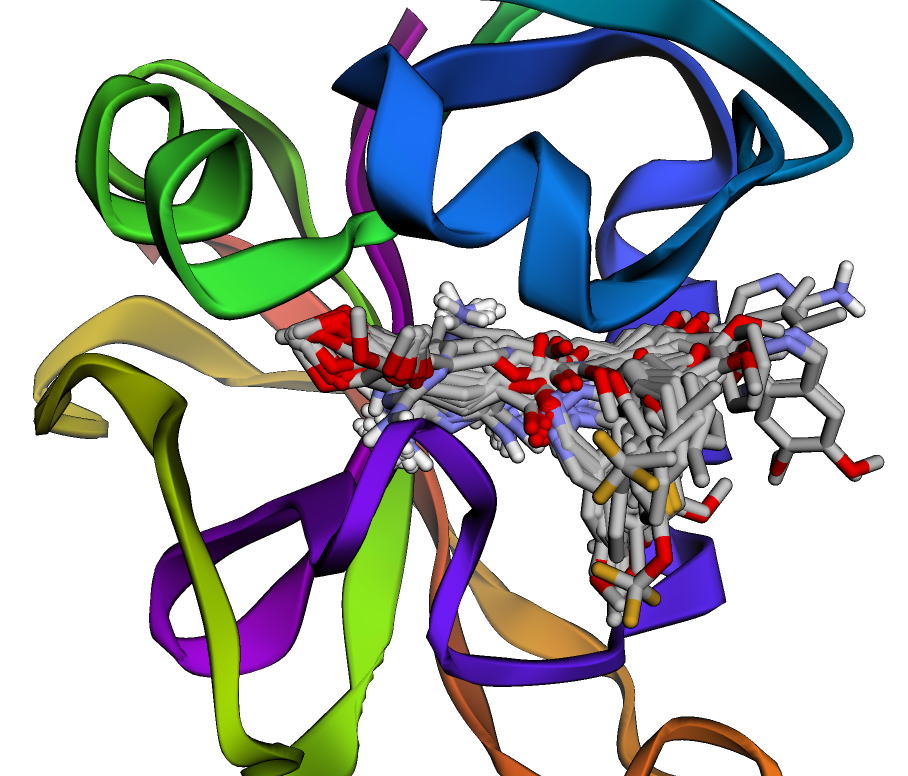

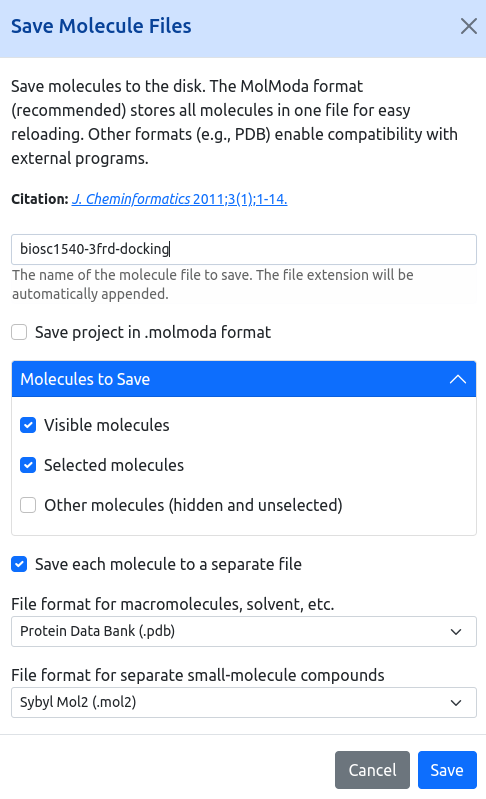

Lecture 17

Docking and virtual screening

Date: Oct 31, 2024

This lecture delves into molecular docking and virtual screening, essential techniques in computational drug discovery. The session will cover different docking algorithms, scoring functions, and strategies for improving virtual screening efficiency and accuracy.

Learning objectives¶

After today, you should have a better understanding of:

- Practical limitations of alchemical simulations.

- Value of data-driven approaches for docking.

- Identifying relevant protein conformations.

- Detecting binding pockets.

- Ligand pose optimization.

- Scoring functions as data-driven predictors.

- Interpretation of docking results.

Presentation¶

Download: biosc1540-l17.pdf

Lecture 18

Ligand-based drug design

Date: Nov 7, 2024

After today, you should have a better understanding of:

- The basic principles of ligand-based drug design and how it differs from structure-based approaches.

- How descriptors and fingerprints evaluate molecular similarity.

- How QSAR models predict biological activity based on molecular structure.

- The role of pharmacophore modeling in identifying essential molecular features for activity.
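
As an illustration of fingerprint-based similarity, the sketch below computes the Tanimoto coefficient, one widely used similarity measure. It assumes each fingerprint is represented as a set of "on" bit indices; the example fingerprints are hypothetical and not derived from real molecules.

```python
def tanimoto(fp_a, fp_b):
    """Tanimoto coefficient: shared on-bits divided by all on-bits in either fingerprint."""
    a, b = set(fp_a), set(fp_b)
    union = a | b
    if not union:
        return 0.0  # convention chosen here for two empty fingerprints
    return len(a & b) / len(union)


# Hypothetical fingerprints given as sets of on-bit positions.
query = {1, 2, 3}
candidate = {2, 3, 4}

print(tanimoto(query, candidate))  # 0.5
```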

Presentation¶

Download: biosc1540-l18.pdf

Scientific python¶

These optional lectures will teach you the fundamentals of scientific Python. Not attending these lectures will not impact your grade.

Lecture 19

Python basics

Date: Nov 19, 2024

Learning Objectives¶

After today, you should have a better understanding of:

- Setting up and navigating a Colab notebook to write and execute Python code.

- Writing simple print statements to display output.

- Adding inline and block comments to make code more understandable.

- Using Python to perform basic arithmetic calculations like addition, subtraction, multiplication, division, and exponentiation.

- Defining and assigning variables.

- Recognizing and using basic Python data types such as integers, floats, strings, and booleans.

- Implementing decision-making logic using `if`, `elif`, and `else` statements.

- Using lists, tuples, and dictionaries to store and manipulate grouped data.

- Writing reusable code by defining functions with parameters and return values.

- Iterating over sequences using `for` loops.

- Writing `while` loops to repeat actions based on conditions.

Materials¶

Additional resources¶

- Here are some additional resources for learning Python: kaggle learn, Software Carpentry, learnpython.org, and Google's Python Class

Lecture 20

Arrays

Date: Nov 21, 2024

Learning objectives¶

What you should be able to do after today's lecture:

- Load, slice, and manipulate multi-dimensional data arrays.

- Calculate descriptive statistics such as mean, median, and standard deviation.

- Generate scatter plots to visualize relationships between variables.

- Use heat maps to explore gene expression patterns across patients.

- Compare gene expression levels between survivors and non-survivors.

- Identify potential genes of interest using a volcano plot.

Materials¶

Additional resources¶

- Here are some additional resources for learning Python: python.crumblearn.org/data/numpy/

Lecture 21

Predictive modeling

Date: Dec 3, 2024

Learning objectives¶

What you should be able to do after today's lecture:

- Define linear regression, its limitations, and objective function.

- Describe the purpose of loss functions in regression.

- Understand the conversion of data from a DataFrame to NumPy arrays.

- Develop hands-on programming skills for implementing regression in Python.

- Interpret the coefficients obtained through optimization and evaluate the model's performance.

- Visualize linear regression models and their fit to data.

- Discuss practical considerations for model interpretation, assumptions, and limitations.

Some relevant code snippets.

    import numpy as np
    import pandas as pd
    from rdkit import Chem
    from rdkit.Chem import AllChem
    from rdkit.Chem import Draw
    import py3Dmol


    def show_mol(smi, style="stick"):
        """Render an interactive 3D view of the molecule given as a SMILES string."""
        mol = Chem.MolFromSmiles(smi)
        mol = Chem.AddHs(mol)  # add explicit hydrogens before 3D embedding
        AllChem.EmbedMolecule(mol)  # generate initial 3D coordinates
        AllChem.MMFFOptimizeMolecule(mol, maxIters=200)  # relax the geometry with MMFF
        mblock = Chem.MolToMolBlock(mol)
        view = py3Dmol.view(width=500, height=500)
        view.addModel(mblock, "mol")
        view.setStyle({style: {}})
        view.zoomTo()
        view.show()

Predictive Modeling - pKa edition¶

Today, we delve into the application of regression analysis in chemistry and data science. Our dataset comprises p$K_\text{a}$ values and corresponding molecular descriptors, offering a quantitative approach to understanding molecular properties.

pKa¶

The p$K_\text{a}$ quantifies the strength of an acid. It is the negative logarithm (base 10) of the acid dissociation constant ($K_\text{a}$) of that acid in solution.

The expression for the acid dissociation constant ($K_\text{a}$), from which p$K_\text{a}$ is derived, is given by the following chemical equilibrium equation for a generic acid (HA) in water:

$$ \text{HA} \rightleftharpoons \text{H}^+ + \text{A}^- $$

The equilibrium constant ($K_\text{a}$) for this reaction is defined as the product of the concentrations of the dissociated ions ($\text{H}^+$ and $\text{A}^-$) divided by the concentration of the undissociated acid ($\text{HA}$):

$$ K_\text{a} = \frac{[\text{H}^+][\text{A}^-]}{[\text{HA}]} $$

Taking the negative logarithm (base 10) of $K_\text{a}$ gives the expression for p$K_\text{a}$:

$$ \text{p}K_\text{a} = -\log_{10}(K_\text{a}) $$

Because of the negative sign, a larger $K_\text{a}$ (more dissociation) corresponds to a smaller p$K_\text{a}$.

In simpler terms:

- A lower p$K_\text{a}$ indicates a stronger acid because it means the acid is more likely to donate a proton (H+) in a chemical reaction.

- A higher p$K_\text{a}$ indicates a weaker acid as it is less likely to donate a proton.

The p$K_\text{a}$ is a crucial parameter in understanding the behavior of acids and bases in various chemical reactions. It is commonly used in fields such as medicinal chemistry, biochemistry, and environmental science to describe and predict the behavior of molecules in solution.
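
The relationship between $K_\text{a}$ and p$K_\text{a}$ defined above is easy to check numerically. The sketch below converts in both directions; the $K_\text{a}$ used for acetic acid is an approximate textbook value included only as an illustration.

```python
import math


def pka_from_ka(ka):
    """pKa is the negative base-10 logarithm of the acid dissociation constant."""
    return -math.log10(ka)


def ka_from_pka(pka):
    """Invert the definition to recover Ka from pKa."""
    return 10 ** (-pka)


acetic_acid_ka = 1.8e-5  # approximate literature value, for illustration only
print(round(pka_from_ka(acetic_acid_ka), 2))  # 4.74

# A tenfold increase in Ka lowers the pKa by exactly one unit.
print(pka_from_ka(1e-4) - pka_from_ka(1e-5))
```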

Exploring the dataset¶

Now, let's shift our focus to a practical application of our theoretical knowledge.

I found a dataset that contains high-quality experimental p$K_\text{a}$ measurements. The raw data includes extra information that makes regression a bit of a nightmare, so I cleaned the data and computed some molecular features (i.e., descriptors) that we can use. Before starting any regression analysis, we should look through the dataset so that we understand which molecular features are available and how they might relate to acidity.

Loading¶

Using the Pandas library, read the CSV file into a DataFrame. Use the variable you defined in the previous step.

import numpy as np

import pandas as pd

CSV_PATH = "https://github.com/oasci-courses/pitt-biosc1540-2024f/raw/refs/heads/main/content/lectures/21/smiles-pka-desc.csv"

df = pd.read_csv(CSV_PATH)
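
The regression step this lecture builds toward can be sketched without the real dataset. The example below fits a straight line by ordinary least squares and reports the mean squared error loss, using only the standard library. The descriptor and p$K_\text{a}$ values are made up, since the actual column names of the CSV are not shown here.

```python
def fit_line(x, y):
    """Closed-form ordinary least squares fit of y = slope * x + intercept."""
    n = len(x)
    x_mean = sum(x) / n
    y_mean = sum(y) / n
    sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, y))
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return slope, intercept


def mean_squared_error(x, y, slope, intercept):
    """Average squared difference between observed and predicted values."""
    return sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y)) / len(x)


# Hypothetical descriptor values and pKa measurements (not from the course dataset).
descriptor = [0.5, 1.0, 1.5, 2.0, 2.5]
pka = [9.8, 8.9, 8.2, 7.1, 6.0]

slope, intercept = fit_line(descriptor, pka)
print(slope, intercept, mean_squared_error(descriptor, pka, slope, intercept))
```

With the real data, the same idea would apply after converting the relevant DataFrame columns to arrays; a multi-descriptor model would need a matrix formulation instead of this single-variable closed form.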

Lecture 22

Project work

Date: Dec 5, 2024

For today's lecture, we will have an open work session dedicated to your ongoing projects. This is a great opportunity to focus on your work, collaborate with classmates, and address any challenges you might be facing. Additionally, I will be available to provide help with PyMOL, a powerful molecular visualization tool that can enhance your project presentations and analyses.

Lecture 23

Project work

Date: Dec 10, 2024

For today's lecture, we will have an open work session dedicated to your ongoing projects. This is a great opportunity to focus on your work, collaborate with classmates, and address any challenges you might be facing. Additionally, I will be available to provide help with PyMOL, a powerful molecular visualization tool that can enhance your project presentations and analyses.

Assessments¶

Welcome to the assessments page for BIOSC 1540 - Computational Biology. This page provides an overview of how your performance in the course will be evaluated.

Distribution¶

Your final grade will be calculated based on the following distribution:

- Exams (35%)

- Project (30%)

- Assignments (35%)

Grading Scale¶

Letter grades will be assigned based on the following scale:

| Letter grade | Percentage | GPA |
|---|---|---|
| A+ | 97.0 - 100.0% | 4.00 |
| A | 93.0 - 96.9% | 4.00 |
| A– | 90.0 - 92.9% | 3.75 |
| B+ | 87.0 - 89.9% | 3.25 |
| B | 83.0 - 86.9% | 3.00 |
| B– | 80.0 - 82.9% | 2.75 |
| C+ | 77.0 - 79.9% | 2.25 |
| C | 73.0 - 76.9% | 2.00 |
| C– | 70.0 - 72.9% | 1.75 |
| D+ | 67.0 - 69.9% | 1.25 |
| D | 63.0 - 66.9% | 1.00 |
| D– | 60.0 - 62.9% | 0.75 |
| F | 0.0 - 59.9% | 0.00 |

For more detailed information on assessments, please consult the full course syllabus or contact the instructor.

Assignments¶

We will have seven homework assignments throughout the semester.

A01

Please submit your answers as a PDF to Gradescope.

Q01¶

Points: 6

Why is the quality of sequencing data typically lower at the end of a read in Sanger sequencing?

Solution

The quality of sequencing data in Sanger sequencing tends to decrease towards the end of a read due to several factors related to the nature of the sequencing process. This phenomenon is primarily attributed to the decreasing population of longer DNA fragments. Here's a detailed explanation:

- Probability of ddNTP incorporation:

    - To obtain longer fragments, we need to avoid incorporating a dideoxynucleotide (ddNTP) until the very end of the sequence.

    - As the length of the fragment increases, the probability of not incorporating a ddNTP at any previous position decreases.

    - This results in fewer long fragments compared to shorter ones, leading to weaker signals for longer sequences.

- Concentration ratio of dNTPs to ddNTPs:

    - While we maintain a low concentration of ddNTPs relative to dNTPs to promote the synthesis of longer fragments, the cumulative probability of ddNTP incorporation still increases with length.

    - This leads to a gradual decrease in the population of fragments as their length grows.

- Mass and mobility differences:

    - As DNA fragments increase in length, the relative mass difference between fragments of consecutive lengths decreases.

    - For example, the mass difference between a 99-mer and a 100-mer is proportionally smaller than the difference between a 9-mer and a 10-mer.

    - This results in poorer separation of longer fragments during electrophoresis, contributing to decreased resolution and quality of signals for longer reads.

- Signal-to-noise ratio:

    - Due to the factors mentioned above, the signal intensity for longer fragments is lower.

    - This leads to a decreased signal-to-noise ratio for longer reads, making it more difficult to accurately determine the base calls at the end of a sequence.

Some students might suggest that the depletion of ddNTPs is responsible for the lower quality of longer reads. However, this is not correct. It's important to clarify that:

- The reaction mixture contains an excess of both dNTPs and ddNTPs.

- The concentrations of these nucleotides are not significantly depleted during the sequencing reaction.

- The relative concentrations of dNTPs and ddNTPs remain essentially constant throughout the process.
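
The argument about shrinking fragment populations can be made concrete with a simple geometric model. The sketch assumes a constant, hypothetical probability `p_terminate` that a ddNTP is incorporated at any given position, which is consistent with the point above that nucleotide concentrations stay essentially constant during the reaction.

```python
def fragment_fraction(length, p_terminate):
    """Fraction of fragments that terminate exactly at position `length`.

    The strand must avoid a ddNTP at the first (length - 1) positions and
    then incorporate one at position `length`.
    """
    return (1 - p_terminate) ** (length - 1) * p_terminate


p = 0.01  # hypothetical per-position termination probability

for length in (1, 100, 500, 900):
    print(length, fragment_fraction(length, p))
```

Under this model the fragment population decays exponentially with length, so the signal for late positions is much weaker than for early ones even though no reagent has been depleted.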

Q02¶

Points: 6

What is the purpose of adding adapters to DNA fragments in Illumina sequencing?

Solution

Adapters are short oligonucleotide sequences added to DNA fragments during Illumina sequencing library preparation.

Key purpose

Adapters contain sequences complementary to oligonucleotides on the Illumina flow cell surface. This allows DNA fragments to bind to the flow cell and form clusters.

They serve several other crucial purposes.

- Priming for sequencing: Adapters include primer binding sites for both forward and reverse sequencing reactions.

- Index/barcode sequences: Adapters often contain unique index sequences that allow for multiplexing—running multiple samples in a single sequencing lane.

- Bridge amplification: Adapter sequences facilitate bridge amplification, which generates clonal clusters of each DNA fragment on the flow cell surface.

- Sequencing initiation: The adapter sequences provide a known starting point for the sequencing reaction.

Q03¶

Points: 4

Compare and contrast the principles behind Sanger sequencing and Illumina sequencing. How does each method overcome the challenge of determining the order of nucleotides in a DNA strand? In your answer, consider the strengths and limitations of each approach.

Solution

Sanger Sequencing:

- Principle: Based on the selective incorporation of chain-terminating dideoxynucleotides (ddNTPs) by DNA polymerase during in vitro DNA replication.

- Method:

    - Uses a single-stranded DNA template, a DNA primer, DNA polymerase, normal deoxynucleotides (dNTPs), and modified nucleotides (ddNTPs) that terminate DNA strand elongation.

    - Resulting DNA fragments are separated by size using capillary electrophoresis.

- Determining sequence:

    - Nucleotide order is determined by the length of DNA fragments produced when chain termination occurs.

    - The fragments are separated by size, and the terminal ddNTP of each fragment indicates the nucleotide at that position.

    - The sequence is read from the shortest to the longest fragment.

- Strengths:

    - High accuracy for long reads (up to ~900 base pairs).

    - Good for sequencing specific genes or DNA regions.

    - Still considered the "gold standard" for validation of other sequencing methods.

- Limitations:

    - Low throughput compared to next-generation methods.

    - Higher cost per base compared to Illumina sequencing.

    - Difficulty in sequencing low-complexity regions or regions with extreme GC content.

Illumina Sequencing:

- Principle: Uses sequencing by synthesis (SBS) technology, where fluorescently labeled nucleotides are detected as they are incorporated into growing DNA strands.

- Method:

    - DNA is fragmented and adapters are ligated to both ends of the fragments.

    - Fragments are amplified on a flow cell surface, creating clusters.

    - Sequencing occurs in cycles, where a single labeled nucleotide is added, detected, and then the label is cleaved off before the next cycle.

- Determining sequence:

    - Nucleotide order is determined by detecting the specific fluorescent signal emitted when each base is incorporated.

    - Millions of clusters are sequenced simultaneously, with each cluster representing a single DNA fragment.

    - The sequence of each cluster is built up one base at a time over multiple cycles.

- Strengths:

    - Very high throughput, capable of sequencing millions of fragments simultaneously.

    - Cost-effective for large-scale sequencing projects.

    - Highly accurate due to the depth of coverage (each base is sequenced multiple times).

    - Versatile, can be used for whole genome sequencing, transcriptomics, and more.

- Limitations:

    - Shorter read lengths (typically 150-300 base pairs) compared to Sanger sequencing.

    - Higher error rates in homopolymer regions.

    - More complex data analysis required due to the large volume of data generated.

Crucial Differences:

- Scale and Throughput: Illumina sequencing is massively parallel, allowing for much higher throughput than Sanger sequencing.

- Read Length: Sanger produces longer individual reads compared to Illumina.

- Methodology: Sanger uses chain termination, while Illumina uses sequencing by synthesis.

- Application: Sanger is better for targeted sequencing of specific genes, while Illumina is preferred for whole genome or exome sequencing.

Subtle Differences:

- Sample Preparation: Illumina requires more complex library preparation, including adapter ligation and amplification.

- Error Profiles: Each method has different types of sequencing errors. Sanger tends to have higher error rates at the beginning and end of reads, while Illumina can struggle with GC-rich regions.

- Data Analysis: Illumina sequencing requires more sophisticated bioinformatics tools for data processing and analysis.

- Cost Structure: While Illumina is more cost-effective for large-scale projects, Sanger can be more economical for sequencing small numbers of samples or specific regions.

- Sensitivity: Illumina can detect low-frequency variants more easily due to its high depth of coverage, which is more challenging with Sanger sequencing.

Q04¶

Points: 5

In Sanger sequencing, the ratio of dideoxynucleotides (ddNTPs) to deoxynucleotides (dNTPs) is crucial for successful sequencing. Explain why this ratio is essential and predict what might happen if:

- The concentration of ddNTPs is too high;

- The concentration of ddNTPs is too low.

Solution

The optimal ratio of ddNTPs to dNTPs in Sanger sequencing is critical for generating a balanced distribution of fragment lengths. This ratio is essential for the following reasons:

- Fragment Distribution: The ratio ensures that chain termination occurs randomly at different positions along the template DNA, producing a set of fragments that differ in length by one nucleotide.

- Read Length: It allows for the generation of fragments covering the entire sequence of interest, typically up to 900-1000 base pairs.

If the concentration of ddNTPs is too high:

- Short Fragments: There would be an overabundance of short DNA fragments due to frequent early termination of DNA synthesis.

- Loss of Long Reads: Longer fragments would be underrepresented or absent, leading to incomplete sequence information for the latter part of the template.

- Reduced Overall Signal: The signal intensity would decrease rapidly along the length of the sequence, making it difficult to read bases accurately beyond the first 100-200 nucleotides.

If the concentration of ddNTPs is too low:

- Long Fragments: There would be an overabundance of long DNA fragments due to infrequent termination of DNA synthesis.

- Loss of Short Reads: Shorter fragments would be underrepresented, leading to poor sequence quality at the beginning of the read.

- Weak Signal for Short Fragments: The signal intensity for the first part of the sequence would be very low, making it difficult to accurately determine the initial bases.
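
The two failure modes can be compared numerically with a rough model in which a higher ddNTP-to-dNTP ratio means a higher per-position termination probability. The three probabilities below are hypothetical values chosen only to contrast the regimes; they are not measured ratios.

```python
def fraction_longer_than(length, p_terminate):
    """Fraction of strands still unterminated after `length` positions."""
    return (1 - p_terminate) ** length


def fraction_terminated_within(window, p_terminate):
    """Fraction of strands that terminate somewhere in the first `window` positions."""
    return 1 - (1 - p_terminate) ** window


# Hypothetical per-position termination probabilities for three ddNTP levels.
regimes = [("too high", 0.05), ("balanced", 0.003), ("too low", 0.0001)]

for label, p in regimes:
    mean_length = 1 / p  # mean of a geometric distribution
    print(
        label,
        round(mean_length),
        fraction_longer_than(200, p),
        fraction_terminated_within(1000, p),
    )
```

In this sketch, the high-ddNTP regime leaves almost no strands longer than 200 bases, while the low-ddNTP regime terminates only a small share of strands within a 1000-base window, so each position in that window is represented by very few labeled fragments.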

Q05¶

Points: 4

Illumina sequencing uses "bridge amplification" to create clusters of identical DNA fragments. Describe how this process works and explain why it's necessary for the Illumina sequencing method. How does this compare to the amplification process in Sanger sequencing?

Solution

Bridge amplification is a crucial step in Illumina sequencing that creates clusters of identical DNA fragments on the surface of a flow cell. The process works as follows:

1. Flow Cell Preparation: The flow cell surface is coated with two types of oligonucleotide adapters.

2. DNA Fragment Attachment: Single-stranded DNA fragments with adapters at both ends are added to the flow cell. These fragments bind randomly to the complementary adapters on the surface.

3. Bridge Formation: The free end of a bound DNA fragment bends over and hybridizes to a nearby complementary adapter on the surface, forming a "bridge."

4. Amplification: DNA polymerase creates a complementary strand, forming a double-stranded bridge.

5. Denaturation: The double-stranded bridge is denatured, resulting in two single-stranded copies of the molecule tethered to the flow cell surface.

6. Repeated Cycles: Steps 3-5 are repeated multiple times, with each fragment amplifying into a distinct cluster.

7. Reverse Strand Removal: One strand (usually the reverse strand) is cleaved and washed away, leaving clusters of single-stranded, identical DNA fragments.
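The repeated bridge formation, amplification, and denaturation cycles described above behave like surface-bound PCR, so the number of tethered strands in a cluster grows roughly geometrically. The sketch below is a toy model under stated assumptions: `efficiency` is the expected fraction of strands copied per cycle, and `max_strands` is a hypothetical cap standing in for the limited number of surface oligos near the original fragment. Neither value comes from Illumina documentation.

```python
def simulate_cluster_growth(cycles, efficiency=1.0, max_strands=None):
    """Return the expected number of tethered strands after each cycle.

    Each cycle, every strand is copied with expected success `efficiency`,
    so counts may be fractional. `max_strands` is a hypothetical cap on
    cluster size; pass None to model unconstrained doubling.
    """
    strands = 1.0  # a cluster is seeded by a single bound fragment
    history = [strands]
    for _ in range(cycles):
        strands *= 1.0 + efficiency
        if max_strands is not None:
            strands = min(strands, max_strands)
        history.append(strands)
    return history


if __name__ == "__main__":
    # Illustrative parameters only.
    for cycle, count in enumerate(simulate_cluster_growth(12, efficiency=0.9, max_strands=1000)):
        print(f"cycle {cycle:2d}: ~{count:.0f} strands")
```

The model shows why a modest number of cycles is enough to turn one bound fragment into a cluster of roughly a thousand copies.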

Bridge amplification is necessary for Illumina sequencing for several reasons:

- Signal Amplification: It creates thousands of identical copies of each DNA fragment, significantly amplifying the signal for detection during sequencing.

- Clonal Clusters: Each cluster represents a single original DNA fragment, ensuring that the sequencing signal comes from identical molecules.

- Parallelization: Millions of clusters can be generated on a single flow cell, allowing for massive parallelization of sequencing reactions.

- Improved Accuracy: The clonal nature of each cluster helps to reduce sequencing errors by providing multiple identical copies for each base call.

- High Throughput: The dense arrangement of clusters on the flow cell surface enables high-throughput sequencing.

The amplification process in Illumina sequencing differs significantly from that used in Sanger sequencing:

- Method:

    - Illumina: Uses solid-phase bridge amplification on a flow cell surface.

    - Sanger: Typically uses solution-phase PCR or cloning in bacterial vectors.

- Scale:

    - Illumina: Massively parallel, creating millions of clusters simultaneously.

    - Sanger: Limited to amplifying one DNA fragment at a time.

- Product:

    - Illumina: Generates clonal clusters of single-stranded DNA.

    - Sanger: Produces a population of double-stranded DNA fragments.

- Locality:

    - Illumina: Amplification occurs in a fixed location on the flow cell.

    - Sanger: Amplification occurs in solution.

Q06

Points: 4

You are designing primers for a Sanger sequencing experiment. The region you want to sequence contains an important single nucleotide polymorphism (SNP) at position 90 from the start of your sequence of interest. Given what you know about the typical quality of Sanger sequencing reads at different positions, where would you design your sequencing primer to bind? Explain your reasoning.

Solution

Before designing the primer, it's crucial to understand the typical quality pattern of Sanger sequencing reads:

- Start of the read (first ~15-35 bases): Often low quality due to primer binding and initial reaction instability.

- Middle section (~35-700 bases): Highest quality, with accurate base calls.

- End of the read (beyond ~700-900 bases): Decreasing quality due to signal decay and increased noise.

Given that the SNP of interest is at position 90 from the start of the sequence, the ideal primer design would place this SNP within the high-quality middle section of the sequencing read. Specifically, design the primer to bind approximately 40-50 bases upstream of the start of the sequence of interest.

Rationale:

- This placement allows for the initial low-quality bases (first 15-35) to be read before reaching the SNP.

- It positions the SNP at around base 130-140 in the sequencing read (40-50 bases between the primer and the start of the sequence of interest + 90 bases to the SNP).

- This location falls well within the high-quality middle section of a typical Sanger sequencing read.
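The arithmetic in this rationale can be checked with a few lines of Python. This is a minimal sketch: the window boundaries are the approximate values quoted above (bases ~35 to ~700), `primer_offset` is assumed to mean the number of bases between the primer and the start of the sequence of interest, and the function names are hypothetical.

```python
HIGH_QUALITY_START = 35   # approximate first high-quality base (from the text)
HIGH_QUALITY_END = 700    # approximate last high-quality base (from the text)


def snp_read_position(primer_offset, snp_position):
    """Position of the SNP within the sequencing read.

    primer_offset: bases between the primer and the start of the
                   sequence of interest (assumed definition).
    snp_position:  SNP position counted from the start of that sequence.
    """
    return primer_offset + snp_position


def in_high_quality_window(read_position):
    """True if the position falls in the typical high-quality region."""
    return HIGH_QUALITY_START <= read_position <= HIGH_QUALITY_END


if __name__ == "__main__":
    for offset in (40, 50):
        position = snp_read_position(offset, 90)
        print(f"offset {offset}: SNP at read base {position}, "
              f"high quality: {in_high_quality_window(position)}")
```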

Benefits of this design:

- Ensures high-quality base calls around the SNP position.

- Provides sufficient context before and after the SNP for accurate analysis.

- Allows for potential upstream sequence verification if needed.

Q07

Points: 2

You are conducting a Sanger sequencing experiment on a gene of interest isolated from a wild plant population. Upon examining the chromatogram, you notice that multiple peaks frequently overlap at several positions, making base calling difficult. This pattern persists even when you repeat the sequencing with newly designed primers. Further investigation reveals that the plant samples were collected from an area known for its high biodiversity and the presence of closely related species.

What is one possible molecular or genomic explanation for the overlapping peaks observed in your chromatogram? Describe how it could lead to the pattern in the sequencing results.

Clarification

In this scenario, we are working with plant samples collected from a wild population in an area known for high biodiversity. This means we're not dealing with lab-grown, genetically identical plants, but rather with plants that have natural genetic variation.

When we say we're sequencing "a gene of interest isolated from a wild plant population," we're typically working with DNA extracted from a single plant specimen. However, a single specimen can still carry more than one version of a gene, for example two different alleles in a heterozygous plant or divergent gene copies inherited from different parent species.

Sanger sequencing typically starts with many copies of a specific DNA region (our "gene of interest"). These copies are created through PCR amplification of the extracted DNA. The sequencing reaction is then performed on this amplified DNA.

The chromatogram represents the sequencing results from all the DNA molecules present in your sample. If there are multiple versions of the gene present, they will all be sequenced simultaneously, resulting in overlapping peaks.

Solution

The observation of multiple overlapping peaks at several positions in a Sanger sequencing chromatogram, persisting even with newly designed primers, can be attributed to various molecular and genomic phenomena. Given the context of a wild plant population in an area of high biodiversity, several plausible explanations warrant consideration.

High Heterozygosity Due to Outcrossing

In outcrossing plant species, high levels of heterozygosity can accumulate, especially in regions with large, diverse populations.

- Molecular Mechanism

    - Plants in the population cross-pollinate, leading to offspring with diverse allelic combinations.

    - Over generations, multiple alleles for each gene can persist in the population.

    - Individual plants may carry two or more distinct alleles for many genes.

- How it Causes Overlapping Peaks

    - When sequencing a heterozygous individual, both alleles are amplified and sequenced.

    - Differences between alleles result in double peaks at heterozygous positions.

- Supporting Evidence

    - Consistent with a wild population in a biodiverse area.

    - Might be particularly noticeable if the population has high genetic diversity.

Allopolyploidy

Allopolyploidy results from hybridization between two or more different species, followed by chromosome doubling.

- Mechanism

    - Hybridization occurs between two related species (e.g., Species A: AA and Species B: BB).

    - The resulting hybrid (AB) undergoes chromosome doubling to form an allopolyploid (AABB).

    - The allopolyploid now contains two sets of similar but not identical genomes.

- How it Causes Overlapping Peaks

    - Multiple similar copies of each gene are present in the genome.

    - During PCR and sequencing, all copies are amplified and sequenced simultaneously.

    - Where sequences differ between copies, multiple bases are incorporated, resulting in overlapping peaks.

- Supporting Evidence

    - Consistent with the high biodiversity and presence of closely related species in the area.

    - Explains the persistence of the pattern with new primers.
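Both explanations predict the same signature in the data: wherever two co-amplified templates differ, the chromatogram shows two overlapping peaks. The sketch below mimics this by combining two aligned sequences into a single string of IUPAC ambiguity codes. It is an illustration only: the sequences are made up, the function names are hypothetical, and it assumes the two templates are the same length with no insertions or deletions (an indel would shift one template and produce overlapping peaks at every downstream position).

```python
# IUPAC nucleotide codes for single bases and two-base mixtures.
IUPAC_CODES = {
    frozenset("A"): "A", frozenset("C"): "C",
    frozenset("G"): "G", frozenset("T"): "T",
    frozenset("AG"): "R", frozenset("CT"): "Y", frozenset("CG"): "S",
    frozenset("AT"): "W", frozenset("GT"): "K", frozenset("AC"): "M",
}


def mixed_base_calls(template_a, template_b):
    """Combine two aligned templates into one IUPAC-coded base-call string."""
    if len(template_a) != len(template_b):
        raise ValueError("templates must be aligned and equal in length")
    return "".join(
        IUPAC_CODES[frozenset((a, b))] for a, b in zip(template_a, template_b)
    )


def double_peak_positions(template_a, template_b):
    """Return 0-based positions where the two templates differ."""
    return [i for i, (a, b) in enumerate(zip(template_a, template_b)) if a != b]


if __name__ == "__main__":
    copy_1 = "ACGTACGGTA"  # made-up sequences for illustration
    copy_2 = "ACATACGCTA"
    print(mixed_base_calls(copy_1, copy_2))
    print(double_peak_positions(copy_1, copy_2))
```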

Q08

Points: 4

In Illumina sequencing, adapters are ligated to DNA fragments before sequencing.

- Explain the role of these adapters in the sequencing process.

- How might errors in adapter ligation affect the sequencing results and downstream data analysis?

- Describe how the design of these adapters helps prevent the formation of adapter dimers.

Solution

Adapters in Illumina sequencing play several crucial roles throughout the sequencing workflow:

- Enabling Bridge Amplification

    - Adapters contain sequences complementary to oligonucleotides on the flow cell surface.

    - This allows DNA fragments to bind to the flow cell and undergo bridge amplification.

- Priming for Sequencing

    - Adapters include binding sites for sequencing primers.

    - This enables the initiation of sequencing reactions for both forward and reverse reads.

- Index Sequences

    - Adapters often contain index sequences (barcodes).

    - These allow for multiplexing, where multiple samples can be sequenced in the same lane and later demultiplexed.

- Facilitating Paired-End Sequencing

    - In paired-end sequencing, adapters at both ends of the fragment allow sequencing from both directions.
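The multiplexing role of index sequences can be made concrete with a small demultiplexing sketch. It assumes each read arrives paired with the index sequence that was read from its adapter, and it requires an exact index match. Production demultiplexers typically tolerate a limited number of mismatches. The index sequences, sample names, and function name below are all hypothetical.

```python
from collections import defaultdict


def demultiplex(reads, sample_by_index):
    """Group reads by sample using an exact match on the index sequence.

    reads:           iterable of (index_sequence, read_sequence) tuples
    sample_by_index: dict mapping index sequences to sample names
    Reads with an unknown index are collected under "undetermined".
    """
    bins = defaultdict(list)
    for index_sequence, read_sequence in reads:
        sample = sample_by_index.get(index_sequence, "undetermined")
        bins[sample].append(read_sequence)
    return dict(bins)


if __name__ == "__main__":
    sample_sheet = {"ACGTAC": "sample_1", "TGCATG": "sample_2"}  # hypothetical
    pooled_reads = [
        ("ACGTAC", "GGATTACA"),
        ("TGCATG", "CCTTAGGA"),
        ("ACGTAC", "TTGACCAG"),
        ("NNNNNN", "AAAAAAAA"),  # index could not be read
    ]
    for sample, sample_reads in demultiplex(pooled_reads, sample_sheet).items():
        print(sample, sample_reads)
```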

Errors in adapter ligation can have several negative effects on sequencing results and downstream analysis:

- Reduced Sequencing Efficiency

    - Fragments without properly ligated adapters won't bind to the flow cell, reducing the overall yield.

- Chimeric Reads

    - Improper ligation can join unrelated DNA fragments into a single chimeric fragment.

    - This results in chimeric reads that can complicate assembly and alignment processes.

- Biased Representation

    - Inefficient ligation can lead to under-representation of certain sequences, especially those with extreme GC content.

    - This introduces bias in quantitative analyses like RNA-Seq or ChIP-Seq.

- Index Hopping

    - In multiplexed samples, free or improperly ligated indexed adapters carried into the pooled library can contribute to index hopping.

    - This results in reads being assigned to the wrong sample during demultiplexing.

- Reduced Read Quality

    - Partially ligated adapters can lead to poor quality reads, especially at the beginning or end of reads.

- Complications in Data Analysis

    - Adapter contamination in reads can interfere with alignment, assembly, and variant calling.

    - It necessitates more stringent quality control and adapter trimming steps in data preprocessing.

- False Positive Variant Calls

    - Adapter sequences present in reads can be misinterpreted as real genomic sequence, leading to false positive variant calls.
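Adapter trimming, mentioned above as a preprocessing step, can be sketched in a few lines. This is a deliberately simplified illustration: it only removes exact matches, whereas dedicated trimming tools also tolerate sequencing errors. The adapter string used here is the prefix commonly cited for Illumina TruSeq-style adapters. Treat it as an assumption and substitute the sequence documented for your library kit. The function name and `min_overlap` default are hypothetical.

```python
ADAPTER = "AGATCGGAAGAGC"  # assumed adapter prefix; check your kit's documentation


def trim_adapter(read, adapter=ADAPTER, min_overlap=3):
    """Remove a 3' adapter from a read using exact matching only.

    First looks for the full adapter anywhere in the read, then for a
    partial adapter (at least `min_overlap` bases) at the very end.
    """
    start = read.find(adapter)
    if start != -1:
        return read[:start]
    # Check for a truncated adapter at the 3' end, longest overlap first.
    longest = min(len(adapter), len(read)) - 1
    for overlap in range(longest, min_overlap - 1, -1):
        if read.endswith(adapter[:overlap]):
            return read[:-overlap]
    return read


if __name__ == "__main__":
    for read in ("ACGTACGTAGATCGGAAGAGCTT", "ACGTACGTAGATC", "ACGTACGT"):
        print(read, "->", trim_adapter(read))
```

Requiring a minimum overlap is a trade-off: a lower value removes more partial adapters but also trims genuine sequence that happens to match the first few adapter bases.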

Illumina adapters are designed with several features to minimize the formation of adapter dimers:

- Y-shaped Adapters

    - Adapters are often designed with a Y-shape, where only a portion of the adapter is double-stranded.

    - This reduces the likelihood of adapters ligating to each other.

- Sequence Design

    - Adapter sequences are designed to minimize complementarity between different adapter molecules.

    - This reduces the likelihood of base-pairing between adapters.

- PCR Suppression Effect

    - Some adapter designs incorporate sequences that, when dimerized, form strong hairpin structures.

    - These structures are poor substrates for PCR amplification, suppressing the amplification of adapter dimers.

Q09

Points: 5

Compare the structure of a typical surfactant to a phospholipid. How do these structural differences contribute to the surfactant's ability to lyse cells? Explain why increasing the concentration of surfactants in a lysis buffer might not always lead to better DNA yield. What potential problems could arise from using too much surfactant?

Solution

Structural Comparison: Surfactant vs. Phospholipid